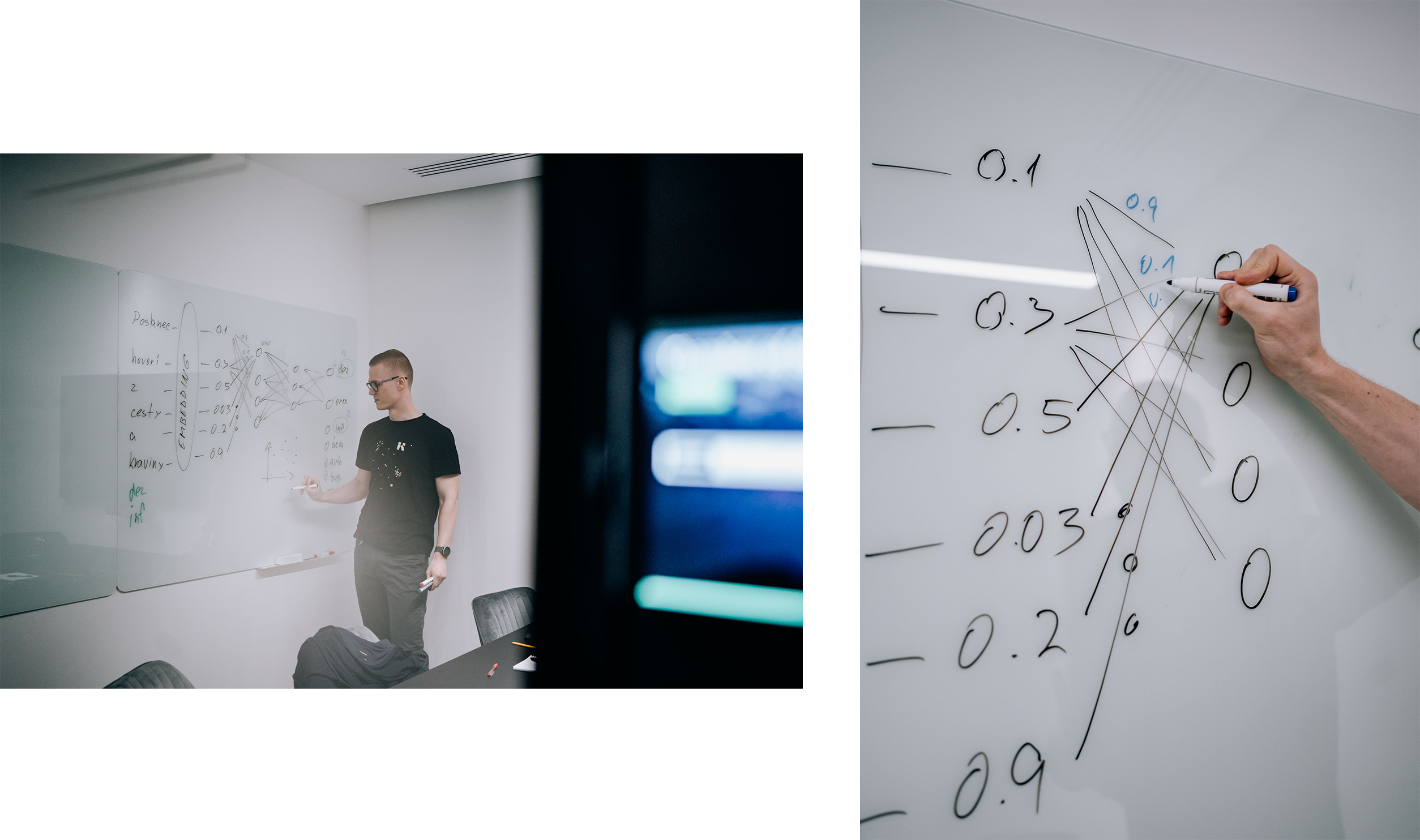

AI researcher Martin Tamajka: “If we are to trust AI in courtrooms, it needs to justify its decisions.”

AI is transforming jobs across a wide range of industries. However, there are still concerns about using it extensively when people’s lives or futures are at risk, such as in medicine or law. In these cases, it’s not enough for AI to just produce an answer – it also needs to be able to explain how it came up with that answer.

“If the algorithm can identify a patient as having dementia, but can’t explain what led to the diagnosis, the doctor will be unlikely to act upon it,” claims Martin Tamajka, a lead research engineer at the Kempelen Institute of Intelligent Technologies (KInIT).

To tackle this problem, he and other researchers at KInIT are conducting research in explainable AI (or “XAI” for short) – a branch of AI research that aims to provide an explanation of models’ “thought processes”. But how do they make sure a model’s explanations are understandable? Can explainable AI teach us more about the biases inherent in our society? And ultimately, can we always trust its explanations?

Martin, can you tell us what explainable AI is and how we can imagine it at work? Is it essentially a “ChatGPT” that can give you resources and reasoning?

First of all, let’s look at the ordinary “black box AI”. Here, you feed a model data and train it for a specific task, for example: “tell me whether there is a dog or a fish in the picture”. We can see the input (the data you feed it) and the output (the prediction), but we don’t know exactly what happened in between to generate the prediction.

Of course, we know the principle: in artificial neural networks, hundreds of thousands or even millions of nodes add or multiply the individual pixels to find structures characteristic of an image of a dog or a fish. In fact, they act as if they were filtering the image in successive stages – first, they detect simple structures like edges or blobs of different colours, and later they combine these structures into more abstract ones until they reach the final prediction.
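As a rough illustration of the "add and multiply pixels" idea, the sketch below slides a small hand-written filter over a tiny grayscale image. It is not a trained network: the `convolve2d` helper, the image and the `vertical_edge` kernel are all invented for this example, and a real model would learn many such filters and stack them in layers.

```python
# Illustrative only: one hand-written "edge detector", not a trained network.

def convolve2d(image, kernel):
    """Slide a small kernel over a 2D image (valid positions only)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Each output value is just a sum of pixel * weight products.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        output.append(row)
    return output

# A 4x6 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hypothetical kernel that responds where brightness changes from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

edge_map = convolve2d(image, vertical_edge)
print(edge_map)  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

The large values sit exactly where the dark and bright halves meet, which is the kind of "simple structure" the early stages of a network pick up.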

However, we don’t know exactly what calculations and node combinations have led to that specific response – even though we can understand how multiplication and addition work, there are so many of them in this decision-making process that no human can keep track of them all.

And this is where explainable AI could step in and show us its decision process?

Yes, explainable AI can show us what influences its decision-making. For example, in the dog/fish-image scenario, explainable AI could highlight the sets of pixels that have influenced its decisions the most. So, if the picture had a rectangular shape and a lot of water, it’s most likely a fish. Whereas if the picture had three big black blobs and big ears, it’s most likely a dog.

In this way, we could potentially verify whether the prediction of an AI system was based on relevant parts of the input data.
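One simple way to produce such a highlight is occlusion: hide one part of the input at a time and see how much the prediction changes. The interview does not say which method is meant, so the sketch below is only one possible technique, and `toy_fish_score` is an invented stand-in for a trained model.

```python
def occlusion_saliency(model, image, baseline=0):
    """Score each pixel by how much the model output drops when it is masked."""
    original = model(image)
    saliency = []
    for r, row in enumerate(image):
        saliency_row = []
        for c, _ in enumerate(row):
            masked = [list(line) for line in image]  # copy the image
            masked[r][c] = baseline                  # hide a single pixel
            saliency_row.append(original - model(masked))
        saliency.append(saliency_row)
    return saliency


def toy_fish_score(image):
    """Hypothetical model: its 'fish' score depends only on the bottom row (the 'water')."""
    return sum(image[-1])


image = [
    [5, 5, 5],
    [1, 1, 1],
    [7, 8, 9],
]

saliency = occlusion_saliency(toy_fish_score, image)
print(saliency)  # [[0, 0, 0], [0, 0, 0], [7, 8, 9]]
```

Only the bottom row gets a non-zero score, so a person looking at the map can check whether the model relied on a sensible part of the picture.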

So, to answer your question about whether explainable AI can reason: no, there is no reasoning, or at least not for now. Even though ChatGPT might in many cases provide a certain level of reasoning, it’s still far from being reliable. Many other AI models provide no reasoning at all. Hopefully, one day we will have mechanisms able to provide reliable and human-understandable explanations for at least the majority of models used in practice – that would be the holy grail of our work.

How is explainable AI used today, and when is it preferred to a black box approach?

Explainable AI is a cornerstone for a new field of applications, mostly in areas that have a high impact on the lives of individuals.

In my research, I have focused a lot on the use of artificial intelligence in medicine, and here, explainability is crucial. If our algorithm can identify a patient as having dementia, but can’t explain what led to the diagnosis, the doctor will be unlikely to act upon it. The tests necessary to confirm the diagnosis might be inconvenient or even harmful, as could be the potential treatment – so without a clear explanation of why the algorithm suggests the diagnosis, the whole thing has little value.

But certainly, XAI has a role to play in a variety of fields – such as self-driving cars, where it’s possible to track the decision-making process that led to an accident; finance, where AI can aid with loan approvals; and even in automated weapon systems, where it can explain the reasoning behind launching a strike (which can also prevent the strike from being performed, if the human operator detects faulty reasoning).

What about the justice system? Is AI actively used there?

AI “helpers” have been used in adjudication for decades. Since the early 2000s, the COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) algorithm developed by Northpointe, and many like it, have been used in the USA, advising judges by giving the defendant a “grade” of how likely they are to re-offend.

Wisconsin vs. Loomis

In 2013, Eric Loomis was found driving a car that had been involved in a shooting. To determine his sentence, the judge used both his previous criminal record and the output of the COMPAS algorithm. He was classified as being at high risk of re-offending, and sentenced to six years.

COMPAS itself has faced controversy multiple times. A study by Julia Dressel and Hany Farid from Dartmouth College found that its predictions were about as accurate as the judgement of random strangers on the internet.

Here we can see the problems with black box AI directly, most prominently in the case of Wisconsin vs. Loomis, where the defendant appealed the ruling because he felt that the judge had based the decision on an algorithm whose workings were secret and could not be checked or validated. He argued that this was unfair and went against his right to a fair trial.

The appeal went up to the Wisconsin Supreme Court, which ultimately ruled against Loomis. Its ruling, however, urged caution in the use of algorithms. We mustn’t forget that even felons are still people with rights – and the right to an explanation of a ruling is one of them. You must be able to show what you have based your ruling on, and here, explainable AI can help considerably.

Explainability doesn’t automatically have to mean understanding. With different levels of education, age and familiarity, how do you make sure the algorithm’s explanations are understood by the recipient?

Explainability is all about walking the line between understandability and faithfulness.

Let’s take, for example, an explainable model similar to COMPAS. Like COMPAS, it would provide a recommendation on a prudent ruling, but it would also provide an explanation of why. But what should that explanation look like?

It could be complete and faithful, and simply provide a long text file with all the data of the convict, with each word being assigned a “weight” of how much it affected the final decision. That would be open and transparent, but not really helpful, as it would be very difficult for the judge to understand.

On the other hand, AI could “explain” via an understandable justification presented as a shorter text, e.g. I advised parole because it was a minor charge and the defendant showed remorse. The risk here is that even though this might be partly true, there might have been a lot of other important details that led to the final decision, which you still don’t know, so how “complete and faithful” is it really?

In other words, we need to find the sweet spot between providing all the information that the AI system used to make its decision, and in just enough detail to reflect the accuracy of that decision, but without overwhelming the end-user receiving the explanation.

Your research at KInIT is trying to tackle this part of explainability. Can you tell us more about how you approach it?

We are looking for ways to give you the best of both worlds. One branch of our research is creating a meta-algorithm that applies multiple explainability algorithms to your problem and finds the one that gives the most satisfactory explanation.

We call it AutoXAI – similar to how AutoML (Automated Machine Learning) tries to find the best machine learning model for the problem at hand, we try to find the best explanation for the problem with XAI algorithms.

Through optimisation, we want to find an XAI algorithm that balances the two components mentioned above – understandability and faithfulness. Basically, we define a set of explainability algorithms and their parameters and we generate explanations of predictions generated by the machine learning model. Then, the question is “Which XAI algorithm is better”?

To answer that question, we need to know how to measure the quality of explanations. Once we have that defined, we know that the algorithm that produces better explanations is the one we want to use for our machine-learning model and data. Therefore, we need to define a set of metrics that measure the extent to which the explanations are understandable and faithful. This is actually the most difficult part of the whole process, which leads to the ultimate question – what is actually a good explanation?

The answer is usually not simple and it depends on the problem. For example, in some situations, such as a picture of a dog, an explanation in the form of highlighted pixels that most influenced the prediction might be the right one. In other cases, a textual explanation is better.
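The selection loop described above can be sketched in a few lines. This is not KInIT's AutoXAI implementation: the two metrics (`faithfulness`, `understandability`), the `alpha` trade-off, the toy linear model and the candidate explainers are all assumptions chosen to keep the example small.

```python
def faithfulness(model, x, attribution):
    """Proxy metric: share of the model output that disappears when the
    features named in the explanation are zeroed out."""
    original = model(x)
    if original == 0:
        return 0.0
    ablated = {k: (0.0 if attribution.get(k, 0.0) != 0.0 else v) for k, v in x.items()}
    return min(1.0, abs(original - model(ablated)) / abs(original))


def understandability(attribution, budget=3):
    """Proxy metric: explanations using at most `budget` features score 1.0."""
    used = sum(1 for w in attribution.values() if w != 0.0)
    return 1.0 if used <= budget else budget / used


def make_top_k_explainer(k):
    """Candidate explainer: keep the k features whose removal changes the output most."""
    def explain(model, x):
        base = model(x)
        contrib = {name: base - model({**x, name: 0.0}) for name in x}
        keep = sorted(contrib, key=lambda n: abs(contrib[n]), reverse=True)[:k]
        return {name: (contrib[name] if name in keep else 0.0) for name in x}
    return explain


def select_explainer(model, x, explainers, alpha=0.5):
    """Score every candidate and return the best name plus all scores."""
    scores = {}
    for name, explain in explainers.items():
        attribution = explain(model, x)
        scores[name] = (alpha * faithfulness(model, x, attribution)
                        + (1 - alpha) * understandability(attribution))
    return max(scores, key=scores.get), scores


# Hypothetical linear model over five features, all set to 1.0.
MODEL_WEIGHTS = {"f1": 5.0, "f2": 3.0, "f3": 1.0, "f4": 0.5, "f5": 0.5}

def toy_model(x):
    return sum(MODEL_WEIGHTS[name] * value for name, value in x.items())

x = {name: 1.0 for name in MODEL_WEIGHTS}
explainers = {
    "top1": make_top_k_explainer(1),  # very short, but leaves a lot out
    "top3": make_top_k_explainer(3),  # middle ground
    "full": make_top_k_explainer(5),  # complete, but long
}

best, scores = select_explainer(toy_model, x, explainers)
print(best, scores)
```

With these made-up metrics the three-feature explanation wins: it covers most of the model's output while staying short. Swapping in different metrics would change the winner, which is exactly why defining them is the hard part.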

Let’s go back to the courtroom. You mentioned that we need a balance between not being overwhelmed, but also getting a detailed enough explanation of the decision. Is there any way that judges could ask for either a more or less detailed explanation?

One way to tackle this is something similar to a “sensitivity slider”. A judge working on a case could ask the AI to give them a list of the ten most decisive factors for the suggested ruling. They can consider them, and if they need more granularity, just increase the sensitivity and see even more factors, which individually hold less weight but as a whole give a broader picture.

In other words, the judge can first look at the most significant argument for or against sending someone to jail, and then they can look at an even broader picture if needed.

This also applies to different levels of understanding. An explanation for a skilled judge might consist of 50 different factors, uncovering a high level of detail and nuance, while the same system can explain the case to a first-year law student using just the three most important factors, summing up the gist of the case with the most hard-hitting evidence.
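In code, such a slider can be as simple as sorting factors by the size of their weight and showing the first `k`. The factor names and weights below are invented for illustration and do not come from any real system.

```python
def top_factors(factor_weights, k):
    """Return the k factors with the largest absolute weight,
    plus the share of total weight they cover."""
    ranked = sorted(factor_weights.items(), key=lambda item: abs(item[1]), reverse=True)
    shown = ranked[:k]
    total = sum(abs(w) for w in factor_weights.values())
    coverage = sum(abs(w) for _, w in shown) / total if total else 0.0
    return shown, coverage


# Hypothetical factor weights; positive pushes towards higher risk, negative towards lower.
weights = {
    "prior_convictions": 0.42,
    "charge_severity": 0.31,
    "showed_remorse": -0.18,
    "time_since_last_offence": -0.06,
    "employment_status": -0.03,
}

brief, brief_coverage = top_factors(weights, 2)        # slider turned down
detailed, detailed_coverage = top_factors(weights, 4)  # slider turned up

print([name for name, _ in brief], round(brief_coverage, 2))
print([name for name, _ in detailed], round(detailed_coverage, 2))
```

The coverage figure gives the reader a sense of how much of the decision is left unexplained at each slider position.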

While on the subject of court rulings, let’s get to AI biases. There is mounting evidence that court rulings are biased toward certain groups of people. Can explainable AI help find those biases?

Of course, although the AI will not directly tell you there is something wrong. By examining which attributes matter more than others in decision making, you can see the bias of the training data that was projected into the model… or indeed, of the world.

There was a famous case where explainable AI was used to study how a machine-learning model classified different types of objects in pictures, including horses. They thought the algorithm worked brilliantly until they found out that instead of looking at important parts of the picture that should be relevant for the prediction, the algorithm just focused on the watermark. This led the model to implicitly create a rule like this – “if there is a watermark, say ‘horse’”.

If you use an XAI algorithm on thousands of court-case final verdicts and the predictions of the AI model that served as recommendations for the judge, you can get a good picture of how the underlying AI model actually arrives at its decisions. In this way, you can potentially discover biases present in the model.

To fix such biases you can clean your training data and retrain the model, adjust the training strategy to explicitly penalise the model when it makes a prediction based on some bias, or use another “de-biasing” procedure, such as deliberately including more of some specific types of rulings, or giving them more weight in the input.
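The last option, giving under-represented cases more weight, can be sketched as inverse-frequency weighting. The group labels are placeholders, and this is only one of many possible re-weighting schemes, not a method attributed to KInIT.

```python
from collections import Counter


def balancing_weights(groups):
    """Give each example a weight so that every group contributes
    the same total weight during training."""
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[g]) for g in groups]


# Hypothetical training set: six rulings of type "A", two of type "B".
groups = ["A"] * 6 + ["B"] * 2
weights = balancing_weights(groups)

total_a = sum(w for g, w in zip(groups, weights) if g == "A")
total_b = sum(w for g, w in zip(groups, weights) if g == "B")
print(total_a, total_b)  # both groups now carry (almost exactly) the same total weight
```

Weights like these would then be passed to whatever training procedure is in use, so that errors on the rarer group count for more.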

Knowing is the first part, but we might also actively do something against the biases. Could XAI help fight that, and is that something you are aiming for?

There are, of course, many ethical problems, on which I am not an expert. But to simplify it, you can aim for two things: equality of opportunity, or equality of outcome.

Let’s steer away from justice for a moment, and consider an AI system admitting children to a school. If you aim for equality of opportunity, AI will choose the 100 best-scoring students to be admitted.

The upside is that you get the cleverest and most hard-working students – while everyone had the same opportunity to apply, only those with the best score were accepted. But if you look below the surface, you might find that most of those children are from wealthy families, as those children can more easily dedicate their time to studying, have the best tutors, etc.

If you find that unfair, you might be in the camp of equality of outcome. This means that instead of just looking at the scores, you include measures like gender, race, wealth, social strata, etc., and try to admit the best people from different groups.

What is great about this is that the people who need education the most have a shot at a better life. On the other hand, they might not be the best students for the opportunity. And other, more suitable students from other groups might have been left behind because there are only 100 admissions available.
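The difference between the two admission policies can be made concrete with a toy example. The applicants, group labels and the round-robin rule below are all invented, and the second function is a deliberately simplistic reading of "admit the best people from different groups".

```python
def admit_top_scores(applicants, seats):
    """Score-only policy: take the best scores, ignore everything else."""
    ranked = sorted(applicants, key=lambda a: a["score"], reverse=True)
    return ranked[:seats]


def admit_round_robin(applicants, seats):
    """Group-aware policy: take the best remaining applicant from each group in turn."""
    by_group = {}
    for a in sorted(applicants, key=lambda a: a["score"], reverse=True):
        by_group.setdefault(a["group"], []).append(a)
    admitted = []
    while len(admitted) < seats and any(by_group.values()):
        for queue in by_group.values():
            if queue and len(admitted) < seats:
                admitted.append(queue.pop(0))
    return admitted


# Hypothetical applicants.
applicants = [
    {"name": "Ana", "group": "high_income", "score": 95},
    {"name": "Ben", "group": "high_income", "score": 90},
    {"name": "Cy", "group": "high_income", "score": 85},
    {"name": "Dee", "group": "low_income", "score": 80},
    {"name": "Eli", "group": "low_income", "score": 70},
]

by_score = [a["name"] for a in admit_top_scores(applicants, 2)]
by_group = [a["name"] for a in admit_round_robin(applicants, 2)]
print(by_score)  # ['Ana', 'Ben']
print(by_group)  # ['Ana', 'Dee']
```

With two seats, the score-only policy admits two applicants from the same group, while the group-aware policy admits Dee and leaves out Ben, who scored higher. That is the trade-off described above in miniature.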

Which option is correct? I can’t tell you. I personally prefer equality of opportunity in most cases, but I also clearly see its downsides. If we want to change some pathology in our society, like increasing the education level of poor minorities in order to elevate these communities as a whole, equality of outcome might be the right choice.

To make it even more complicated, we don’t always want to get rid of all biases, as some of them are wanted. For example, when sending out invitations for breast cancer screenings, we want AI to be biased towards women over 50. That is not a bad bias – it’s a statistical fact that incidentally acts as a bias.

Recognising “right” and “wrong” biases, while right and wrong are painted with very broad brushstrokes, is a difficult task for ethicists, and its importance in the future will be immense.

Let’s finish up on the most important subject – responsibility. Who is responsible when the AI makes the decision? How do you think the future of responsibility for decisions will evolve?

I don’t believe we should let any kind of AI do important decision making in the foreseeable future. I see AI and humans as more like co-workers.

There are many things I would let AI do solely on its own. I will let it recommend me the best phone. The same goes for maybe 80–90% of the decision making in many professions. Let the AI do the tedious and repetitive work, so you have time and mental capacity to focus on the 10% of difficult cases.

Especially in critical decision making, a human should always have the last word. AI can be an advisor to a doctor, judge or soldier, but they must make the final call and weigh up all the pros and cons.

Let’s not forget that AI does make mistakes. It’s far from infallible. And it will never be!

There will always be errors, and people have to learn to question AI. I believe that questioning it and not believing its outputs outright is what will, in the end, add to the acceptance of the technology.

In these times, when we’re observing the growth of very powerful generative models such as ChatGPT or Midjourney, we will have to learn how to check the trustworthiness of many things on the web, in the news or on TV, as creating things like deepfakes will be easy.

So yes, we will always need humans to be making the final call and thinking critically about the outputs they get from the AI. Just as you don’t believe everything you find on Google (or I would like to believe so), you will learn to use your common sense when working with AI. In this way, it can help us in many areas while keeping the risks minimal.

Martin Tamajka has been working with different forms of AI in high-responsibility domains since his student years. In the past, he focused on the analysis of multidimensional medical images and image analysis in general. He now works on deep learning and computer vision and aims to increase the transparency and reliability of neural networks through methods of explainability and interpretability.

AUTHOR: Andrej Kras

PHOTOS: Dominika Behúlová