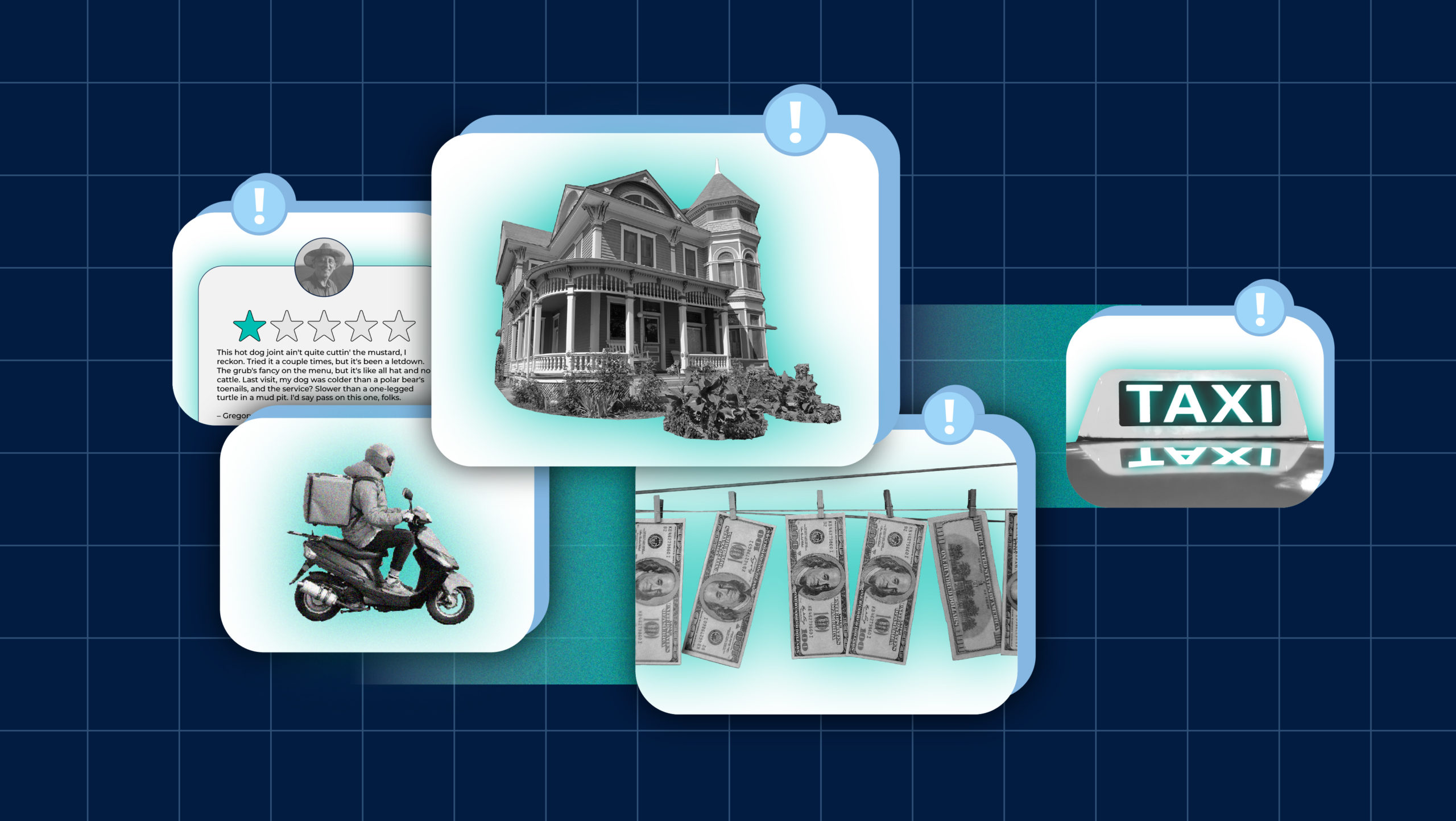

Five threats in sharing economy apps and how biometrics could help fight back

Sharing economy platforms such as Uber or Airbnb need to stay in control of who is using their services and how. However, fraudsters and criminals sometimes manage to exploit these services, putting users' safety and the platforms' reputation at serious risk. Read about five threats and scams that have occurred on sharing economy platforms and how biometric technology could help prevent them.

Too good to be true: counterfeit listings on holiday rental apps

Imagine booking a splendid seaside villa for your dream holiday, only to arrive at the provided address and find nothing but a dilapidated building – and that’s if you’re lucky. Just as with any other aspect of our lives, counterfeit listings and fraud can infiltrate short-term rental platforms, too.

The problems range from properties that don’t quite match their descriptions to properties that don’t exist at all. In some instances, accounts are hijacked or cloned, and properties are rented out without the owners’ knowledge or consent.

Counterfeit listings usually show plenty of red flags. Typically, they prompt users to pay outside the platform, often by direct bank transfer, which makes refunds difficult. Another glaring warning sign is accommodation that looks too dreamy for its modest price tag.
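The two red flags above lend themselves to simple automated checks. The Python sketch below is purely illustrative: the phrase list, the `Listing` type and the "below 50% of the median comparable price" threshold are hypothetical choices, not the rules of any real platform.

```python
import re
from dataclasses import dataclass
from statistics import median

# Hypothetical phrases that suggest moving payment off the platform.
OFF_PLATFORM_PATTERNS = [
    r"\bbank transfer\b",
    r"\bwire (?:me|the money)\b",
    r"\bpay (?:me )?directly\b",
    r"\bwestern union\b",
]


@dataclass
class Listing:
    description: str
    nightly_price: float


def red_flags(listing: Listing, comparable_prices: list[float],
              price_ratio: float = 0.5) -> list[str]:
    """Return the (hypothetical) red flags raised by a listing."""
    flags = []
    text = listing.description.lower()
    # Red flag 1: the listing asks for payment outside the platform.
    if any(re.search(pattern, text) for pattern in OFF_PLATFORM_PATTERNS):
        flags.append("off-platform payment request")
    # Red flag 2: the price is far below similar listings in the area.
    if comparable_prices and listing.nightly_price < price_ratio * median(comparable_prices):
        flags.append("price far below comparable listings")
    return flags
```

For example, a "stunning seaside villa" at 40 per night that asks guests to "pay directly by bank transfer", compared with similar listings at 180, 210 and 250, would trigger both flags. In practice such a score would only queue a listing for human review rather than remove it automatically.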

Biometric verification – an effective tool for platforms against fraudsters

Platforms are continuously working to eliminate fake listings and have recently started using AI to speed up identifying and removing them. This usually involves asking the owners of suspicious accounts and listings to take additional steps, such as photographing the accommodation directly through the app and sharing their live GPS location.
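Checking a host’s live GPS location against a listing’s address essentially comes down to a distance calculation. A minimal sketch using the standard haversine formula, assuming the listing’s address has already been geocoded to latitude and longitude and that a 200-metre tolerance is acceptable (both hypothetical choices):

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def location_matches(listing_coords: tuple[float, float],
                     live_coords: tuple[float, float],
                     max_km: float = 0.2) -> bool:
    """True if the host's live GPS fix is within max_km of the listed address."""
    return haversine_km(*listing_coords, *live_coords) <= max_km
```

A host standing a few dozen metres from the listed building would pass, while one reporting a location in another city would not. GPS can be spoofed, which is why such a check works best alongside verifying who the host actually is.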

Biometric onboarding can be a powerful tool, especially for preventing fake listings from being created and existing accounts from being compromised. It strengthens security on home rental platforms by requiring prospective hosts to verify their identity with unique biological traits, such as their fingerprints or face, using their smartphones or computers. Additionally, on-site biometric guest verification can protect hosts from guests hiding behind false identities and from potential squatters.

Dial D for Delivery: fraud in online food ordering

The online food-delivery world often falls prey to low-value scams and occasional opportunists. Relatively modest order values and the pressure to fulfil orders quickly create fertile ground for deception. The most notorious entry-level scam is “leave at the side door”: a user places an order, sticks a “leave at the side door” note on their door, gets rid of the “evidence” once the food has been dropped off and then requests a refund, claiming that the courier left nothing at the doorstep. But the world of food delivery also hides riskier, albeit rare, scams, such as the impersonation of delivery personnel.

In this darker scenario, criminals sign up as delivery drivers with a counterfeit account based on falsified IDs, or by hijacking a poorly protected account. They accept an order through the official app, pick up the customer’s meal from the restaurant and then cancel the order. Next, they show up at the customer’s address, food in hand, claiming that the app malfunctioned and cancelled both the order and the payment. They then present a point-of-sale (POS) terminal and ask the customer to pay in person to receive their food. Customers who fall into this trap risk anything from being charged significantly more than the agreed price to having their credit card data stolen and traded among fraudsters on the dark web.

The dark web

A little bit of everything can be found on the dark web: weapons, drugs, passwords and even compromised accounts on sharing economy platforms. Interestingly, hacked accounts often fetch a higher price than credit card information. The reason is that platforms sometimes respond sluggishly, giving criminals a larger window of opportunity to cash in.

Biometric verification for delivery staff

Applying biometric technology might seem like overkill in a sector where the stakes are often low, such as food delivery. However, when delivery drivers are impersonated or accounts are hijacked, the potential harm to both customers and restaurants is far from trivial.

Biometric verification helps ensure that users are who they say they are, preserving trust between customers, restaurants and the platform. By verifying the identity of delivery drivers, it prevents unauthorised individuals from posing as the restaurant’s couriers or scamming customers during delivery. Biometric verification also acts as a barrier against the creation of fake restaurants and the manipulation of menu items and prices to deceive customers.

Is this a real star? Fake reviews on sharing platforms

How many stars does it get? Whether we are picking a restaurant, a handyman or a second-hand dress, reviews on sharing platforms are the compass guiding our choices. Studies suggest that around 80% of users find their way to digital platforms through online reviews, especially relative newcomers, such as those who first ventured into online ordering and peer-to-peer services during the pandemic.

Yet the mechanism breaks down when hundreds or even thousands of fake reviews start to contaminate a platform. Fraudulent endorsements serve various purposes. They can lend an air of credibility to fake listings, luring unsuspecting users into scams, or distort competition by artificially boosting the reputation of a shop, restaurant or user. Conversely, fake negative reviews can damage a profile’s reputation beyond repair. Here we enter the world of so-called “review farms”, where underpaid employees or bots churn out fraudulent reviews in droves.

Fake reviews are particularly harmful on the most open platforms, where virtually anyone can post a review without completing a transaction. In contrast, many platforms only accept reviews after a transaction, which in theory raises the cost of creating a fake review and discourages the practice. However, even these platforms aren’t entirely immune, as fake accounts, fake transactions or compromised accounts can still come into play.


Keeping fake reviews under control, thanks to biometrics

The battle against fake reviews has been going on for years. It has involved revising regulations and legal frameworks, alongside platforms’ tireless efforts to identify and remove fraudulent reviews using both human moderators and artificial intelligence. In this fight, biometrics could tip the balance in favour of honesty. Imagine if every online reviewer had to log in and verify their identity with a selfie: many review farms would probably go bust.
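A selfie-gated review policy could combine the two safeguards discussed in this section: a verified identity and, where the platform requires it, a completed transaction. The sketch below assumes a hypothetical `Account` record whose `biometrically_verified` flag is set during onboarding; it is not the data model of any specific platform.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    account_id: str
    # Hypothetical flag, set once the user passed a selfie-to-ID check.
    biometrically_verified: bool = False
    # IDs of counterparties (shops, hosts, drivers) with a completed transaction.
    completed_with: set[str] = field(default_factory=set)


def may_review(reviewer: Account, target_id: str,
               require_transaction: bool = True) -> bool:
    """Decide whether a reviewer is allowed to review a target."""
    # Unverified accounts (bots, farm-created profiles) cannot review at all.
    if not reviewer.biometrically_verified:
        return False
    # Optionally restrict reviews to post-transaction assessments.
    if require_transaction and target_id not in reviewer.completed_with:
        return False
    return True
```

With this rule, a verified customer can review a restaurant they ordered from, while an unverified account is rejected even on a platform that does not require a transaction. Note that a compromised but verified account would still pass, which is why periodic re-verification matters.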

Dangerous lifts: assaults in ridesharing

As popular and convenient as ridesharing services are, there is one challenge companies can’t afford to ignore: ensuring a safe environment for both drivers and passengers. Although 99.9% of rides in the US ended without a safety incident in 2019, reports from ridesharing companies also listed around 4,000 sexual assaults. Platforms inevitably reflect broader societal issues, and no area of public life or industry is immune to such incidents, yet these numbers cannot be swept under the rug.

Biometric verification of drivers and passengers

Several proactive measures could help prevent assaults. These include fitting robust plexiglass barriers between the driver and passengers, installing at least one camera for extra surveillance, and integrating a “panic button” that immediately calls an emergency number and shares the vehicle’s precise location. Biometrics can add a further layer of preventive protection.

Biometric verification can deter physical violence because it makes account takeovers and drivers impersonating someone else much more difficult. A reliable biometric remote identity verification system enables ride-hailing apps to verify the identity of each driver at any time. The verification process works as follows:

- any driver can receive a notification at any given moment asking them to safely stop and take a selfie,

- the selfie is then compared to the driver’s ID,

- at the same time, the system performs a liveness check to ensure it is dealing with a real person, not a photo or other fake image.
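The steps above can be sketched as a simple decision rule. This sketch assumes that a face-recognition model (not shown) has already turned the selfie and the ID photo into numeric embedding vectors, and that a separate liveness detector returns a score between 0 and 1. The thresholds and function names are hypothetical and are not taken from DOT or any other product.

```python
import math
import random

MATCH_THRESHOLD = 0.8     # hypothetical; real systems tune this on their own data
LIVENESS_THRESHOLD = 0.9  # hypothetical


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two face embeddings (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify_driver(selfie_embedding: list[float], id_photo_embedding: list[float],
                  liveness_score: float) -> bool:
    """Pass only if the selfie is live AND matches the face on the driver's ID."""
    is_live = liveness_score >= LIVENESS_THRESHOLD
    is_match = cosine_similarity(selfie_embedding, id_photo_embedding) >= MATCH_THRESHOLD
    return is_live and is_match


def pick_drivers_for_spot_check(active_driver_ids: list[str], rate: float,
                                rng: random.Random) -> list[str]:
    """Randomly choose which active drivers receive a selfie request right now."""
    return [driver for driver in active_driver_ids if rng.random() < rate]
```

Requiring both conditions is the key design choice: a printed photo of the real driver may match the ID but should fail the liveness check, while a live impostor passes liveness but fails the face match. The random selection reflects the idea that any driver can be asked to verify at any moment.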

Biometric onboarding for sharing economies

The selfie-to-ID verification and liveness check are part of biometric onboarding with DOT, Innovatrics’ digital onboarding tool. DOT is software that enables facial registration, extracts data from IDs, performs facial comparisons and verifies the liveness of each registered driver.


Cleaning dirty money, the new way

Criminals have also learned to exploit the new sharing economy ecosystem for illicit gains. One novel avenue involves using peer-to-peer services to launder money or cash out stolen credit cards.

Here’s how the operation typically unfolds. An accomplice, posing as a driver or peer-to-peer accommodation host, is hired on the dark web. But instead of hosting a real guest or giving a real ride, the host or driver accepts payment from a fake guest or passenger who never intends to show up. Once the payment has been processed through the platform, the driver or host refunds a portion of it to the criminal. In other cases, one person holds two accounts and moves money from the dirty account to the clean one through fake peer-to-peer transactions. And just like that, the money is laundered.

Avoid fake rides and stays with biometrics

Biometrics comes to the rescue in two significant ways. First, biometric onboarding at sign-up keeps fake accounts at bay and thwarts the hijacking of real ones. Second, the same technology could in future be used on site to confirm that a service, be it a car ride or an accommodation booking, actually takes place and involves genuine individuals.
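Biometric onboarding can also expose the two-account scheme described above, because one person’s face should match only one account. A minimal sketch of such a duplicate check, assuming enrolled face embeddings are stored as vectors and using a hypothetical similarity threshold; a production system would search a proper vector index rather than scan every account:

```python
import math

DUPLICATE_THRESHOLD = 0.85  # hypothetical similarity cut-off


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two face embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_duplicate_accounts(new_embedding: list[float],
                            enrolled: dict[str, list[float]],
                            threshold: float = DUPLICATE_THRESHOLD) -> list[str]:
    """Return IDs of existing accounts whose enrolled face matches the new sign-up."""
    # Linear scan for clarity; each match suggests the same person already has an account.
    return [account_id for account_id, embedding in enrolled.items()
            if cosine_similarity(new_embedding, embedding) >= threshold]
```

A non-empty result does not prove fraud on its own (a person may legitimately be both a guest and a host), but it gives the platform a reason to check whether money is moving between accounts controlled by the same individual.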

AUTHOR: Giovanni Blandino

IMAGES: Shutterstock

Sources

- Inside the War on Fake Consumer Reviews

- Airbnb is cracking down on fake listings. The platform has removed 59,000 so far this year

- Scammers impersonate delivery service support to rip off drivers and restaurants

- Uber’s US Safety Report

- Lyft’s Community Safety Report

- The Dark Side of Delicious: Decoding Food Delivery Fraud on the Dark Web